Book recommendation system

The original data file was obtained from "https://www.kaggle.com/jealousleopard/goodreadsbooks". It does not include descriptions of the books.

Using the GoodReads.com API, book descriptions were obtained where available and saved into 'books_extensive.pkl'.

The book recommendation system is built on the following concepts:

- A simple search engine using the Levenshtein distance.

- Content-based recommender system: using the description of each book (synopsis + title + first author), compute book-book similarity coefficients.

- Using user ratings for certain books, build a User-Book matrix.

- Perform matrix factorization to reduce the User-Book matrix to a smaller number of latent components and predict ratings for unseen books. To predict these ratings, the book-book similarity coefficients are used as weights.

import datetime
import string
import time

import numpy as np
import pandas as pd
import regex as re
import matplotlib.pyplot as plt
import seaborn as sns
import fuzzywuzzy as fuzzy
from fuzzywuzzy import process
import goodreads as gr
from goodreads import client
from nltk.stem.porter import PorterStemmer
from sklearn.cluster import KMeans, SpectralClustering
from sklearn.decomposition import NMF, LatentDirichletAllocation, SparsePCA, PCA, TruncatedSVD
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
from sklearn.metrics import silhouette_samples, silhouette_score
from sklearn.metrics.pairwise import linear_kernel, cosine_similarity

sns.set()

%matplotlib inline

Data cleaning

- Kept only English-language books.

- Kept only the first author's name.

- Computed a combined rating as Log(avg_rating*total_ratings), with negative values set to 0.

- Pulled and stored the description and popular shelves of each book using the API.

- Corrected ISBN and ISBN13 (mostly).

- Replaced publication_date with the year only.

- Total: 10544 books.
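The combined-rating rule above can be sketched as a small function. This is an illustration, not the code that produced 'combo_rating': it assumes the natural logarithm (the base is not stated), and the helper name `combo_rating` and the toy values are hypothetical. A product of 0 gives `-inf` and a product below 1 gives a negative log; both are clipped to 0.

```python
import numpy as np
import pandas as pd


def combo_rating(average_rating: pd.Series, ratings_count: pd.Series) -> pd.Series:
    """Hypothetical helper: log(average_rating * ratings_count), negatives set to 0.

    Assumes the natural logarithm; the base used for 'combo_rating' is not stated.
    """
    product = average_rating.astype(float) * ratings_count.astype(float)
    # log(0) = -inf and log(x) < 0 for 0 < x < 1; silence the divide-by-zero warning
    with np.errstate(divide="ignore"):
        logged = np.log(product)
    # Set every negative value (including -inf) to 0
    return logged.clip(lower=0)


# Toy data (hypothetical values)
demo = pd.DataFrame({"average_rating": [4.5, 0.0, 0.5], "ratings_count": [2000, 15, 1]})
print(combo_rating(demo.average_rating, demo.ratings_count).round(3).tolist())
```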

all_books_data = pd.read_pickle(r"DataScience\Proj\Projects\books\data\goodreadsData\books_extensive.pkl")

all_books_data.head()

Data cleaning is necessary

However, this task only uses the columns book_id, title, authors and description.

all_books_data.isna().sum()

all_books_data.columns = ['book_id', 'title', 'authors', 'average_rating', 'isbn', 'num_pages',
                          'ratings_count', 'text_reviews_count', 'publication_date', 'publisher',
                          'combo_rating', 'description', 'popular_shelves', 'similar_books',
                          'isbn13']

Replace missing descriptions with the title

all_books_data.description = all_books_data.description.fillna(all_books_data.title)

all_books_df1 = all_books_data[['book_id', 'title', 'authors', 'description']].copy()

all_books_df1 = all_books_df1.drop_duplicates(subset=['title'], keep='first')

all_books_df1.shape

Merge 'description', 'title' and 'authors' into one 'all_text' feature

all_books_df1['all_text'] = all_books_df1[['description', 'title', 'authors']].agg(' '.join, axis=1)

Extract and vectorize keywords using TF-IDF

- Drop typical English stop words such as "a", "the", "however" and "although" (scikit-learn's built-in English stop-word list).

- Drop words that contain digits (numeric or alphanumeric tokens).

- Limit the vocabulary to 10000 words.

# Build, for each book, the list of its unique words, dropping stop words and tokens that contain digits
vectorizer = CountVectorizer(stop_words='english', max_features=10000)

word_list = []

# Treating every word as a "document" makes the fitted vocabulary the book's unique words
for i, row in all_books_df1.all_text.astype('str').items():
    vectorizer.fit(row.split(' '))
    words = vectorizer.get_feature_names_out()
    word_list.append([x for x in words if not any(c.isdigit() for c in x)])

all_books_df1['all_text'] = word_list

print("Example, list of words from the first book:\n")
print(np.array(all_books_df1.all_text.iloc[0]))

With a vocabulary of 10000 words, each book is represented as a vector in a 10000-dimensional space. Words in a book are scored with the Term-Frequency / Inverse-Document-Frequency (TF-IDF) method:

- Term frequency (TF) => how often a word occurs within that book's text.

- Inverse document frequency (IDF) => how many book descriptions contain the word; the more common the word across books, the lower the score.

- Score F = TF*IDF
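To make the score concrete, the sketch below recomputes TF-IDF by hand on three toy documents and checks it against scikit-learn. It reflects TfidfVectorizer's defaults: TF is the raw count, IDF is smoothed as ln((1 + n) / (1 + df)) + 1, and each book vector is then L2-normalized. The documents are hypothetical.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Toy "book texts" (hypothetical)
docs = [
    "the wizard school of magic",
    "a magic ring and a dark lord",
    "pride and prejudice in the country",
]

# TF: raw term counts per document (same tokenizer and vocabulary order as TfidfVectorizer)
counts = CountVectorizer().fit_transform(docs).toarray().astype(float)

n_docs = counts.shape[0]
doc_freq = (counts > 0).sum(axis=0)               # number of documents containing each word
idf = np.log((1 + n_docs) / (1 + doc_freq)) + 1   # smoothed IDF (scikit-learn default)

scores = counts * idf                                        # F = TF * IDF
scores /= np.linalg.norm(scores, axis=1, keepdims=True)      # L2-normalize each document

sk = TfidfVectorizer().fit_transform(docs).toarray()
print(np.allclose(scores, sk))  # True
```

Words such as "magic" that appear in two of the three documents get a lower IDF than words unique to one document.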

vectorize_feature = all_books_df1.all_text.astype('str')

tfidf = TfidfVectorizer(max_features=10000, stop_words='english')

X_tfidf = tfidf.fit_transform(vectorize_feature).toarray()

tfidf_feature_names = tfidf.get_feature_names_out()

print("Number of books x vocabulary size")

print(X_tfidf.shape)

Create a quick book-search method

- Enter any keywords related to the title or the author.

- Return the best-matching title from the list, using fuzzy string matching based on the Levenshtein distance.
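For reference, the Levenshtein distance is the minimum number of single-character insertions, deletions and substitutions needed to turn one string into another. The sketch below is the classic dynamic-programming version with a hypothetical `best_match` helper that picks the title with the smallest distance. It is only an illustration: fuzzywuzzy's `process.extractBests` uses its own default scorer (`fuzz.WRatio`), which combines several similarity ratios rather than returning a raw edit distance.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance between a and b, keeping only one previous DP row."""
    prev = list(range(len(b) + 1))  # distance from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution (or match)
        prev = curr
    return prev[-1]


def best_match(query, choices):
    """Hypothetical helper: the choice closest to the lower-cased query."""
    query = query.lower()
    return min(choices, key=lambda c: levenshtein(query, c.lower()))


titles = ["pride and prejudice jane austen", "emma jane austen", "the hobbit j.r.r. tolkien"]
print(levenshtein("kitten", "sitting"))    # 3
print(best_match("Emma Austen", titles))   # emma jane austen
```

Raw edit distance penalizes length differences heavily, which is one reason libraries such as fuzzywuzzy offer partial and token-based scorers.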

# Lower-cased "title author" strings to match the keywords against
tit_auth = all_books_df1[["title", "authors"]].agg(' '.join, axis=1).str.lower()

def getMostMatchingTitle(keywords="harry potter"):
    # On a Series, extractBests returns (match, score, index label) tuples
    matout = process.extractBests(keywords.lower(), tit_auth, limit=1)
    idx = matout[0][2]
    matching_title = all_books_df1.title.loc[idx]
    return matching_title

Example:

getMostMatchingTitle(keywords="harry potter")

Predict most similar books: Book-book similarity

The similarity coefficients are calculated by using the text description of the books.

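Using the books matrix $B$ of n_books x n_words (i.e. 9808 books x 10000 words), the similarity coefficient between book $i$ and book $j$ is their cosine similarity:

$ s_{ij} = \frac{B_{i} \cdot B_{j}}{\| B_{i} \| \, \| B_{j} \|} $

TfidfVectorizer L2-normalizes every row by default, so the denominator equals 1 and the plain dot product computed by linear_kernel already gives $s_{ij}$.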
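A quick numerical check of the cosine formula: on a random toy books x words matrix (hypothetical values), the explicit formula, `linear_kernel` on L2-normalized rows, and scikit-learn's `cosine_similarity` all give the same matrix.

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity, linear_kernel
from sklearn.preprocessing import normalize

rng = np.random.default_rng(0)
B = rng.random((4, 6))  # toy "books x words" matrix (hypothetical values)

# Explicit formula: s_ij = B_i . B_j / (||B_i|| ||B_j||)
norms = np.linalg.norm(B, axis=1)
S_formula = (B @ B.T) / np.outer(norms, norms)

# After L2-normalizing each row, the plain dot product (linear_kernel) is the cosine
B_unit = normalize(B, norm="l2")
S_linear = linear_kernel(B_unit, B_unit)

print(np.allclose(S_formula, S_linear), np.allclose(S_formula, cosine_similarity(B)))  # True True
```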

description_cosine_mat = linear_kernel(X_tfidf, X_tfidf)

cosine_books_df = pd.DataFrame(description_cosine_mat, index = all_books_df1.book_id, columns=all_books_df1.book_id)

cosine_books_df.shape

# Return the n books whose descriptions are most similar (cosine score) to the given book
def similar_books(title, n=5, bid=None):
    if bid is None:
        # Titles are unique after de-duplication, so this yields a single book_id
        bid = all_books_df1.book_id[all_books_df1.title == title].iloc[0]
    # Similarity of every book to the query book, highest first
    sim_scores = cosine_books_df.loc[bid].sort_values(ascending=False)
    # Exclude the query book itself, then keep the top n
    sim_ids = sim_scores.drop(bid).index.values[:n]
    # Titles and authors in order of decreasing similarity
    prediction = all_books_df1.set_index("book_id").loc[sim_ids, ["title", "authors"]]
    return prediction

Find the books that are similar to a given book. You can specify either a book title or some keywords that describe a book. For example, let's find the book that best matches the keywords 'jane austen' and get the list of most similar books.

keywords = "jane austen"

matching_title = getMostMatchingTitle(keywords)

print("Input keywords: ", keywords)

print("================")

print("Most matching title for the given keywords is:", matching_title)

print("================")

print("Top 15 books similar to the above book based on the book-book similarity coefficient:")

similar_books(matching_title, 15)

Recommender system based on User-Book matrix

- Using user ratings for certain books, build a User-Book matrix.

- Combine the text descriptions of the books and the user ratings to form the User-Book matrix.

- Only a small subset of books has ratings; keep only the books that users have rated.

- Keep only the users who have rated at least one book in that subset.

Read the user ratings data.

ratings = pd.read_csv(r"C:\Users\jbelapur\Documents\Extra_activities\DataScience\Proj\Projects\books\data\goodreadsData\kaggle\ratings.csv")

ratings.head()

Not all books have been rated. Keep only those books which are rated by the users.

sub_book_ids = np.intersect1d(all_books_df1.book_id.unique(), ratings.book_id.unique())

sub_book_ids.shape

In total, 2356 books have ratings.

sub_books = all_books_df1[all_books_df1.book_id.isin(sub_book_ids)].copy()

sub_books.shape

Keep only the ratings of the books above; the remaining users are those who have rated at least one of them.

subdata_ratings = ratings[ratings.book_id.isin(sub_book_ids)]

sub_users = subdata_ratings.user_id.unique()

print("Number of books with ratings: ", subdata_ratings.book_id.nunique())

print("Number of total users who have rated at least 1 of the above books: ", subdata_ratings.user_id.nunique())

print("============================================")

print("Example, books rated by user_id 2338: ")

subdata_ratings[subdata_ratings.user_id.isin([2338])]

Content based recommendations for a user

- Get the 3 highest-rated books of a given user.

- Using the book-book similarity coefficients, get a list of suggestions for each of the books above.
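The two steps above can be sketched independently of the notebook's data. In this minimal version, `recommend_from_top_rated`, the toy similarity matrix and the toy ratings are hypothetical: for each of the user's top-rated books it takes the most similar books the user has not rated, then merges the lists while keeping first occurrences.

```python
import pandas as pd


def recommend_from_top_rated(user_ratings, sim, top_k=3, n_per_book=2):
    """Hypothetical helper: union of the most similar unrated books for each top-rated book.

    user_ratings: Series indexed by book_id holding one user's ratings.
    sim: square DataFrame of book-book similarities, indexed and columned by book_id.
    """
    top_books = user_ratings.sort_values(ascending=False).index[:top_k]
    recs = []
    for b in top_books:
        # Similar books to b, excluding b itself and everything the user already rated
        scores = sim.loc[b].drop(user_ratings.index).sort_values(ascending=False)
        recs.extend(scores.index[:n_per_book].tolist())
    # Drop repeats while preserving order
    return list(dict.fromkeys(recs))


ids = [1, 2, 3, 4, 5]
sim = pd.DataFrame([[1.0, 0.2, 0.9, 0.1, 0.3],
                    [0.2, 1.0, 0.1, 0.8, 0.4],
                    [0.9, 0.1, 1.0, 0.2, 0.2],
                    [0.1, 0.8, 0.2, 1.0, 0.5],
                    [0.3, 0.4, 0.2, 0.5, 1.0]], index=ids, columns=ids)
user_ratings = pd.Series({1: 5, 2: 4})
print(recommend_from_top_rated(user_ratings, sim))  # [3, 5, 4]
```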

def getTopRatedBookIDsForUser(userid=2338):
    # book_ids rated by the user, sorted from highest to lowest rating
    print("User ID: ", userid)
    user_df = subdata_ratings[subdata_ratings.user_id == userid]
    user_df = user_df.sort_values(by=['rating'], ascending=False)
    sorted_books_ids = user_df.book_id
    return sorted_books_ids

def recommendedBooks(userid=2338, top_k=3, n_per_book=2):
    # Books rated by the user, highest rating first
    sorted_books_ids = getTopRatedBookIDsForUser(userid=userid)
    # Titles in the same rating order (filtering with isin() alone would lose that order)
    matching_titles = (sub_books.set_index('book_id')
                       .loc[sorted_books_ids.drop_duplicates().values, ['title']]
                       .reset_index())
    top_titles = matching_titles.head(top_k)
    print(f"Top {top_k} books liked by user: \n")
    print(top_titles)
    print("-----")
    # Most similar books for each top-rated title (DataFrame.append was removed in pandas 2.0)
    prediction = pd.concat([similar_books(title, n_per_book) for title in top_titles.title])
    prediction = prediction.drop_duplicates(subset=['title'], keep="first")
    # Exclude books the user has already rated
    prediction = prediction[~prediction.title.isin(matching_titles.title)]
    return prediction

recommendedBooks(2338)

There are 42000 users and 2356 books. Due to computational and memory limitations, I cannot process such a large matrix, so I limit the number of users to 10000.

Randomly select 10000 users.

random_10kids = np.random.choice(subdata_ratings.user_id.unique(), 10000, replace=False)

subdata_10kusers = subdata_ratings[subdata_ratings.user_id.isin(random_10kids)]

subdata_10kusers.shape

subdata_10kusers.head(5)

Extract and vectorize keywords using TF-IDF (rated books only)

- Drop typical English stop words such as "a", "the", "however" and "although".

- Drop words that contain digits (numeric or alphanumeric tokens).

- Limit the vocabulary to 10000 words.

# Same word-list preprocessing, restricted to the books that have ratings
vectorizer = CountVectorizer(stop_words='english', max_features=10000)

word_list = []

# Keep each book's unique words that contain no digits
for i, row in sub_books.all_text.astype('str').items():
    vectorizer.fit(row.split(' '))
    words = vectorizer.get_feature_names_out()
    word_list.append([x for x in words if not any(c.isdigit() for c in x)])

sub_books['all_text'] = word_list

print("Example, list of words from the first book:\n")
print(np.array(sub_books.all_text.iloc[0]))

vectorize_feature = sub_books.all_text.astype('str')
tfidf = TfidfVectorizer(max_features=10000, stop_words='english')
Xb_tfidf = tfidf.fit_transform(vectorize_feature).toarray()
tfidf_feature_names = tfidf.get_feature_names_out()

print("Number of books x vocabulary size")
print(Xb_tfidf.shape)

Create cosine similarity matrix

sb_description_cosine_mat = linear_kernel(Xb_tfidf, Xb_tfidf)

sb_cosine_books_df = pd.DataFrame(sb_description_cosine_mat, index = sub_books.book_id, columns=sub_books.book_id)

sb_cosine_books_df.shape

sb_cosine_books_df.head()

Create Users-Books matrix

In the User-Book matrix $A$, each row represents a user, each column represents a book, and each cell holds the rating that user gave to that book: $A_{ij}$ is the rating given by user $u_i$ to book $b_j$, and ranges from 1 to 5.
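As a small illustration of how the long ratings table becomes $A$, the sketch below pivots a hypothetical five-row table with the same column names as ratings.csv. Pairs with no rating become NaN, and repeated (user, book) pairs would be averaged.

```python
import numpy as np
import pandas as pd

# Toy ratings in the same long format as ratings.csv (hypothetical values)
ratings_demo = pd.DataFrame({
    "user_id": [1, 1, 2, 3, 3],
    "book_id": [10, 20, 10, 20, 30],
    "rating":  [5, 3, 4, 2, 5],
})

# Rows = users, columns = books, cells = rating; unrated pairs become NaN
A = ratings_demo.pivot_table(index="user_id", columns="book_id", values="rating", aggfunc="mean")
print(A)
```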

user_mat = subdata_10kusers.pivot_table(index="user_id", columns="book_id", values="rating", aggfunc="mean")

user_mat.shape

user_mat.head(5)

Fill in ratings of books a user has not read

Most entries of the matrix are missing (NaN), because each user rates only a few books. The task is to predict and fill in ratings of the unread books for each user. As a first guess, each missing rating is filled with the book's average rating over all users who rated it, i.e. its overall popularity.
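The popularity-based first guess amounts to a column-wise mean fill. A minimal sketch on a hypothetical 3 x 3 users x books matrix:

```python
import numpy as np
import pandas as pd

# Toy users x books matrix with missing ratings (hypothetical values)
A = pd.DataFrame({10: [5.0, 4.0, np.nan],
                  20: [3.0, np.nan, 2.0],
                  30: [np.nan, np.nan, 5.0]}, index=[1, 2, 3])

# Fill each missing cell with that book's (column's) mean rating over the users who rated it
A_filled = A.fillna(A.mean(axis=0))
print(A_filled)
```

Book 10 has ratings 5 and 4, so user 3's missing rating becomes 4.5.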

# First guess for each missing rating: the book's average rating over all users who rated it
user_mat_filled = user_mat.fillna(user_mat.mean(axis=0, skipna=True))

user_mat_filled.head()

Estimate ratings using book-book similarity coefficients: $ \hat{r}_{ub} = \frac{ \sum_{i \in B_{u}} r_{ui} s_{ib} }{ \sum_{i \in B_{u}} s_{ib}} $

The book-book similarity coefficients are used as weights to predict ratings of unseen books, where $B_{u}$ is the set of books user $u$ has already rated. The final estimate for each unseen book is the mean of this weighted rating and the popularity-based first guess.
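A worked example of $\hat{r}_{ub}$ for a single user and a single unseen book, as a minimal sketch. The function name `predict_rating`, the ratings, the similarities and the popularity guess of 3.5 are hypothetical; the zero guard mirrors the 0.0001 replacement used in the loop below.

```python
import numpy as np


def predict_rating(rated, sims, eps=1e-4):
    """Hypothetical helper: sum_i r_ui * s_ib / sum_i s_ib over the books the user rated."""
    rated = np.asarray(rated, dtype=float)
    sims = np.asarray(sims, dtype=float).copy()
    sims[sims == 0.0] = eps  # avoid an all-zero weight sum
    return float(np.sum(rated * sims) / np.sum(sims))


# The user rated three books 5, 3 and 1; their similarities to the unseen book b
r_hat = predict_rating([5, 3, 1], [0.6, 0.3, 0.1])  # approximately 4.0
# Blend with a popularity-based first guess (3.5 here, hypothetical)
blended = 0.5 * (r_hat + 3.5)                        # approximately 3.75
print(round(r_hat, 6), round(blended, 6))
```

Because the most similar rated book carries most of the weight, the estimate lands close to that book's rating of 5.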

# Blend a similarity-weighted rating with the popularity-based first guess for every unseen book
for idx in user_mat_filled.index:
    # Books rated by user u (non-missing entries)
    books_by_ui = user_mat.loc[idx].dropna()
    # Unseen books for user u
    unread_by_ui = np.setdiff1d(user_mat_filled.columns, books_by_ui.index.values)
    for b in unread_by_ui:
        # Similarities between unseen book b and each book the user rated
        cos_values = sb_cosine_books_df.loc[b, books_by_ui.index].to_numpy(copy=True)
        # Replace zero similarities so the weights never sum to zero
        cos_values[cos_values == 0.0] = 0.0001
        r_val = np.average(books_by_ui.values, weights=cos_values)
        # Mean of the popularity-based rating and the weighted rating (.loc avoids chained assignment)
        user_mat_filled.loc[idx, b] = 0.5 * (r_val + user_mat_filled.loc[idx, b])

user_mat_filled.head()

Matrix factorization method

- Extract the latent factors of the User x Books matrix such that these factors capture most of the information needed to predict user ratings.

- The User-Book matrix is decomposed into two matrices: (1) User x n_components and (2) n_components x Books.
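A toy check of the decomposition with TruncatedSVD: `fit_transform` returns the User x n_components factor ($U\Sigma$) and `components_` the n_components x Books factor ($V^{T}$), and their product is the low-rank reconstruction. The matrix is a hypothetical one of exact rank 2, so two components reproduce it.

```python
import numpy as np
from sklearn.decomposition import TruncatedSVD

rng = np.random.default_rng(0)
# Toy users x books matrix of exact rank 2 (hypothetical values)
A = rng.random((6, 2)) @ rng.random((2, 5))

svd = TruncatedSVD(n_components=2, random_state=0)
user_factors = svd.fit_transform(A)   # users x n_components (U * Sigma)
book_factors = svd.components_        # n_components x books (V^T)
A_hat = user_factors @ book_factors   # low-rank reconstruction

print(user_factors.shape, book_factors.shape, np.allclose(A, A_hat))  # (6, 2) (2, 5) True
```

On real rating data the matrix is not low-rank, so the reconstruction is an approximation whose quality depends on n_components.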

n_comps = 10

# Run truncated-SVD

modeltsvd = TruncatedSVD(n_components=n_comps)

tsvd_mat = modeltsvd.fit_transform(user_mat_filled)

tsvd_mat.shape

modeltsvd.components_.shape

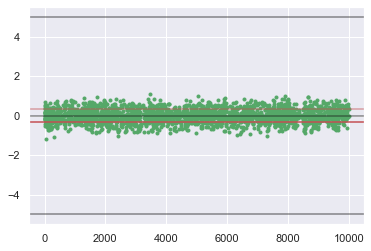

Compare the ratings reconstructed by matrix factorization with the (filled) User x Book matrix they were fitted on. For a given user, plot the rating difference for every book. Notice the narrow spread of the differences (the red lines mark $\pm \sigma$).
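The differences above are measured against the filled matrix, which mostly contains imputed values. A common complementary check, sketched here as an assumption rather than part of the original analysis, is the RMSE over the cells that hold a real rating. The helper name and toy arrays are hypothetical.

```python
import numpy as np


def observed_rmse(original, predicted):
    """Hypothetical helper: RMSE over cells that hold a real rating (non-NaN in `original`)."""
    orig = np.asarray(original, dtype=float)
    pred = np.asarray(predicted, dtype=float)
    mask = ~np.isnan(orig)
    return float(np.sqrt(np.mean((orig[mask] - pred[mask]) ** 2)))


original = np.array([[5.0, np.nan], [np.nan, 2.0]])
predicted = np.array([[4.0, 3.3], [4.1, 2.0]])
print(observed_rmse(original, predicted))  # sqrt(0.5), about 0.7071
```

In the notebook this could be applied as `observed_rmse(user_mat.values, predicted_mat)`, assuming user_mat and user_mat_filled share the same row and column order.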

# Reconstruct the (filled) User x Book matrix from the latent factors
predicted_mat = np.dot(tsvd_mat, modeltsvd.components_)
predicted_mat.shape

# Differences for the first user across all books
diff_ratings = user_mat_filled.iloc[0] - predicted_mat[0, :]
sig_spread = diff_ratings.std()

plt.figure()
plt.plot(diff_ratings, 'g.')
plt.axhline(5, color='k', alpha=0.5)
plt.axhline(sig_spread, color='r', alpha=0.5)
plt.axhline(0, color='k', alpha=0.5)
plt.axhline(-sig_spread, color='r', alpha=0.5)
plt.axhline(-5, color='k', alpha=0.5)
plt.xlabel("book_id")
plt.ylabel("Filled rating - predicted rating")

Recommend top 10 books based on predicted ratings

For example, find the top recommendations for a given user.
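The selection step reduces to dropping the books the user already rated and sorting the remaining predictions. A minimal sketch with a hypothetical `top_n_unread` helper and toy predicted ratings indexed by book_id:

```python
import pandas as pd


def top_n_unread(predicted_row, rated_ids, n=10):
    """Hypothetical helper: highest-predicted books the user has not rated, best first."""
    unread = predicted_row.drop(labels=rated_ids, errors="ignore")
    return unread.sort_values(ascending=False).head(n)


pred = pd.Series([4.1, 3.2, 4.8, 2.5, 3.9], index=[10, 20, 30, 40, 50])
print(top_n_unread(pred, rated_ids=[30], n=2).index.tolist())  # [10, 50]
```

Book 30 has the highest prediction but is excluded because the user already rated it.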

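# Predicted ratings, labelled with the same users and books as the filled User-Book matrix
predicted_mat_df = pd.DataFrame(predicted_mat, index=user_mat_filled.index, columns=user_mat_filled.columns)

# Get recommendations for a given user using the User-Book matrix
# user_id 61 exists only if it was drawn in the random 10000-user sample; otherwise use the first user
idx = 61 if 61 in user_mat.index else user_mat.index[0]

books_by_ui = user_mat.loc[idx].dropna()
unread_by_ui = np.setdiff1d(user_mat_filled.columns, books_by_ui.index.values)
# Unseen books sorted by predicted rating, highest first
reco_ids = predicted_mat_df.loc[idx, unread_by_ui].sort_values(ascending=False)

print("Books rated by the user: ")
sub_books[['title', 'book_id']][sub_books.book_id.isin(books_by_ui.index)]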

Books recommended for the user:

# Top 10 unseen books, in order of predicted rating
sub_books.set_index('book_id').loc[reco_ids.index[:10], ['title']]